Geometry of Regression

Geometric Interpretation of Linear Regression

A picture is worth a thousand words. This post on stats Stack Exchange gives a great description of the geometric representation of Linear Regression problems. Let’s see this in action using some simple examples.

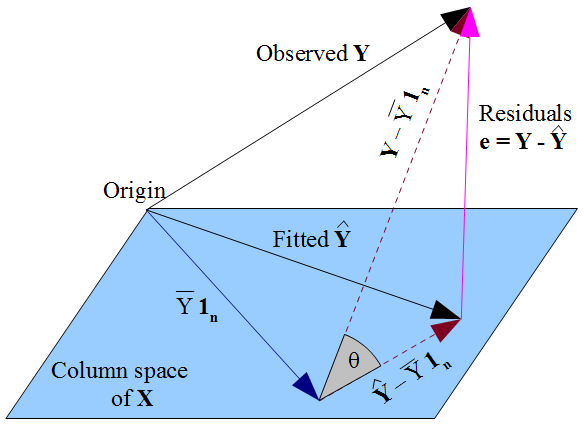

The graphic below, which appeared in the original Stack Exchange post, captures the essence of linear regression very aptly.

Source: Stack Exchange

Overview

Geometrically, the linear/multiple regression fit is the orthogonal projection of the predicted variable $y$ onto $\mathbf{span(1, X)}$ (with constant) or onto the span of $\mathbf{X}$ alone (without constant).

In the more familiar form of linear regression:

- With Constant: $\hat y = \beta_0 + \beta_1 x$

- Without Constant: $\hat y = \beta_1 x$

We will focus on regression with constant.
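To make the two cases concrete, here is a minimal NumPy sketch that fits both forms to three illustrative data points (they match the values used in the Analysis section). The names `A_const`, `A_noconst`, `coef_const` and `coef_noconst` are my own, and `np.linalg.lstsq` is just one convenient way to obtain the projection coefficients.

```python
import numpy as np

# Three illustrative data points (assumed here; same values as in the analysis).
x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 2.5, 5.0])

# With constant: project y onto span(1, x).
A_const = np.column_stack([np.ones_like(x), x])
coef_const, *_ = np.linalg.lstsq(A_const, y, rcond=None)

# Without constant: project y onto the line spanned by x alone.
A_noconst = x.reshape(-1, 1)
coef_noconst, *_ = np.linalg.lstsq(A_noconst, y, rcond=None)

print("with constant:   ", coef_const)    # [beta_0, beta_1]
print("without constant:", coef_noconst)  # [beta_1]
```

The slope differs between the two fits because $y$ is projected onto a different subspace in each case.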

The regression coefficients are the weights of that linear combination: the projection of $y$ is written as $\hat y = \beta_0 \mathbb{1} + \beta_1 \mathbf{X}$, a linear combination of $\mathbb{1}$ and $\mathbf{X}$.

Additionally, $\mathbf{N}$ data points imply that $y$, $\mathbb{1}$, and $\mathbf{X}$ are each $\mathbf{N}$-dimensional vectors. Hence, I will use only three data points for the predictor and predicted variables, which restricts us to 3 dimensions. Readers can recreate the above graphic using the analysis below if they wish.

Analysis

import numpy as np

import matplotlib.pyplot as plt

import sympy as sp

%matplotlib inline

sp.init_printing(use_unicode=True)

plt.style.use("ggplot")

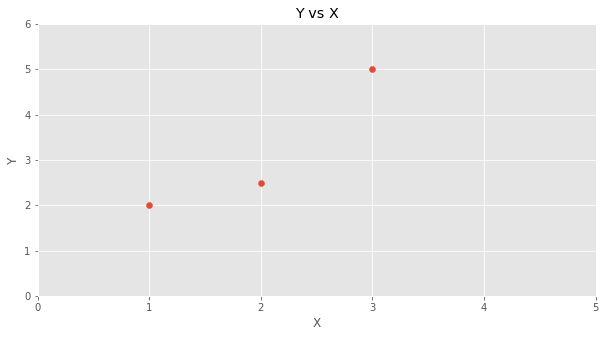

Let’s create our $y$ and $\mathbf{X}$.

x = np.array([1.0, 2, 3])

y = np.array([2, 2.5, 5])

Y = sp.Matrix(y)

Y

X = sp.Matrix(np.transpose([np.ones(len(x)), x]))

X

fig = plt.figure()

plt.scatter(x, y)

plt.xlim((0, 5))

plt.ylim((0, 6))

plt.title("Y vs X")

plt.xlabel("X")

plt.ylabel("Y")

plt.gcf().set_size_inches(10, 5)

plt.show()

Regression Coefficients

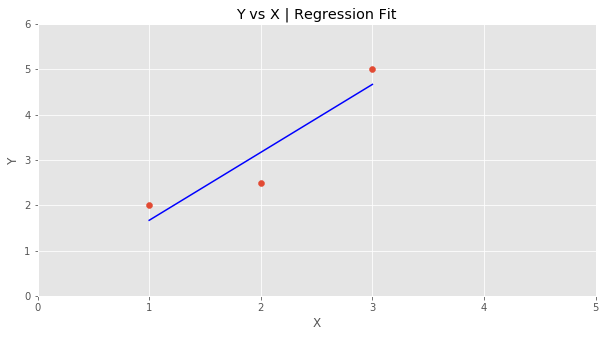

Linear regression coefficients $\beta$ are given by:

\begin{equation} \beta = (\mathbf{X^\intercal} \mathbf{X})^{-1} \mathbf{X^\intercal} y \end{equation}

Let’s calculate $\mathbf{\beta}$ for $\mathbf{X}$ and $y$ we defined above.

beta = ((X.transpose() * X) ** -1) * X.transpose() * Y

beta
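As a numerical cross-check of the formula above, the following sketch solves the normal equations $(\mathbf{X^\intercal} \mathbf{X}) \beta = \mathbf{X^\intercal} y$ with NumPy instead of forming the inverse explicitly. The names `X_np` and `beta_np` are my own, chosen to avoid clashing with the SymPy objects in this post.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 2.5, 5.0])

# Design matrix with a column of ones (the constant) and the predictor.
X_np = np.column_stack([np.ones_like(x), x])

# Solve (X^T X) beta = X^T y directly rather than inverting X^T X.
beta_np = np.linalg.solve(X_np.T @ X_np, X_np.T @ y)

print(beta_np)  # approximately [0.1667, 1.5]
```

Solving the linear system is generally preferred over an explicit inverse for numerical stability, although for a 2×2 system like this one the difference is negligible.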

Since we now have $\beta$, we can calculate the estimated $y$ or $\hat y$.

\begin{equation} \hat y = \mathbf{X} \beta = \mathbf{X} (\mathbf{X^\intercal} \mathbf{X})^{-1} \mathbf{X^\intercal} y \end{equation}

y_hat = X * beta

y_hat
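The matrix $\mathbf{X} (\mathbf{X^\intercal} \mathbf{X})^{-1} \mathbf{X^\intercal}$ in the equation above is what performs the projection. A short NumPy sketch, with my own names `P`, `X_np` and `y_hat_np`, can check the two properties one would expect of an orthogonal projection matrix: it is symmetric, and applying it twice is the same as applying it once.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 2.5, 5.0])
X_np = np.column_stack([np.ones_like(x), x])

# Projection matrix onto span(1, x).
P = X_np @ np.linalg.inv(X_np.T @ X_np) @ X_np.T

# Projecting y gives the fitted values.
y_hat_np = P @ y

print(np.allclose(P, P.T))    # symmetric
print(np.allclose(P @ P, P))  # idempotent: projecting twice changes nothing
print(y_hat_np)
```

Idempotence is the algebraic counterpart of the geometric picture: once $y$ has been dropped onto the plane, projecting it again leaves it where it is.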

fig = plt.figure()

plt.scatter(x, y)

plt.xlim((0, 5))

plt.ylim((0, 6))

plt.title("Y vs X | Regression Fit")

plt.xlabel("X")

plt.ylabel("Y")

plt.plot(x, np.array(y_hat, dtype=float).ravel(), color='blue')

plt.gcf().set_size_inches(10, 5)

plt.show()

Error Analysis

Residuals for the model are given by $\epsilon = y - \hat y$. This is the error that remains after predicting $y$ using both $\mathbb{1}$ and $\mathbf{X}$ in the model. The error vector is normal to $\mathbf{span(1, X)}$ since it is the component of $y$ that does not lie in $\mathbf{span(1, X)}$.

res = Y - y_hat

res
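Being normal to $\mathbf{span(1, X)}$ means the residual vector has zero dot product with every vector in that span, and in particular with $\mathbb{1}$ and with $\mathbf{X}$. The sketch below checks this numerically; `X_np`, `beta_np` and `res_np` are my own names, and the coefficients are recomputed with `np.linalg.lstsq` so the block stands alone.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 2.5, 5.0])
X_np = np.column_stack([np.ones_like(x), x])

# Fit and residuals (y minus fitted values).
beta_np, *_ = np.linalg.lstsq(X_np, y, rcond=None)
res_np = y - X_np @ beta_np

# One dot product per column of X: first with 1, then with x.
orth_check = X_np.T @ res_np
print(orth_check)  # both entries should be ~0 up to rounding
```

The first entry being zero also says that the residuals sum to zero, which holds whenever the model includes the constant.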

The average vector $\bar y$ is, geometrically, the projection of $y$ onto the $\mathbb{1}$ vector alone.

y_bar = sp.Matrix(np.full(len(y), np.mean(y)))

y_bar
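To see why the average appears, we can apply the standard formula for projecting a vector onto a single direction, here $\mathbb{1}$. This is a worked step I am adding for illustration, with $y_i$ denoting the individual data values:

\begin{equation} \bar y = \frac{\mathbb{1}^\intercal y}{\mathbb{1}^\intercal \mathbb{1}} \mathbb{1} = \frac{\sum_{i=1}^{N} y_i}{N} \mathbb{1} \end{equation}

For our three data points this gives $(2 + 2.5 + 5)/3 \approx 3.1667$ in every component.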

We can also calculate the error of the average model, in which the predicted values are given by the average vector $\bar y$. This error is $\kappa = \bar y - y$.

kappa = y_bar - Y

kappa

Both $\bar y$ and $\hat y$ are predictors of $y$, so it is reasonable to ask how much error we remove by adding $\mathbf{X}$ to the model. Let’s call this difference $\eta = \hat y - \bar y$.

eta = y_hat - y_bar

eta

From here we can check whether $\eta$ and $\epsilon$ are perpendicular to each other by calculating their dot product.

dot_product = eta.transpose() * res

dot_product

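Hence, $\eta$ and $\epsilon$ are normal to each other, since their dot product is 0 (up to floating-point rounding). This is expected: both $\hat y$ and $\bar y$ lie in $\mathbf{span(1, X)}$, so $\eta$ does too, while $\epsilon$ is normal to that span.

From here we can also verify the relationship between the Total Sum of Squares (SST), the Sum of Squares due to Regression (SSR) and the Sum of Squares due to Error (SSE):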

$\mathbf{SST} = \mathbf{SSR} + \mathbf{SSE}$

- $\mathbf{SST}$ can be represented by the squared norm of $\kappa$

- $\mathbf{SSR}$ can be represented by the squared norm of $\eta$

- $\mathbf{SSE}$ can be represented by the squared norm of $\epsilon$

We can use the Pythagorean theorem to check the above relationship, i.e. \begin{equation} ||\kappa||^2 = ||\eta||^2 + ||\epsilon||^2 \end{equation}

kappa.norm() ** 2 - eta.norm() ** 2 - res.norm() ** 2

Hence, as we expected, $\kappa$, $\eta$ and $\epsilon$ form a right-angled triangle.
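The same result can be sketched algebraically. Splitting $y - \bar y$ into the part outside $\mathbf{span(1, X)}$ and the part inside it, and expanding the squared norm, the cross term is the dot product of the residual with $\eta$, which we found to be zero:

\begin{equation} \begin{aligned} ||\kappa||^2 &= ||y - \bar y||^2 = ||(y - \hat y) + (\hat y - \bar y)||^2 \\ &= ||y - \hat y||^2 + 2 (y - \hat y)^\intercal (\hat y - \bar y) + ||\hat y - \bar y||^2 \\ &= ||\epsilon||^2 + 0 + ||\eta||^2 \end{aligned} \end{equation}

For the three data points used here, the numbers work out to $31/6 = 2/3 + 9/2$.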

Summary

Through this post, I demonstrated how we can interpret linear/multiple regression geometrically.

Also, I solved a linear regression model using Linear Algebra.