Exploring GPT2 (Part 2)

Benchmarking our own GPT2 model against the Huggingface GPT2 model

In the previous post, we explored the GPT2 model and how to generate text using it. In this post, we will benchmark our own GPT2 model against the Huggingface GPT2 model.

Hardware specs

I have an RTX 4090 GPU with 24 GB of VRAM on a Linux desktop running Ubuntu 22.04. Here is the output of nvidia-smi:

$ nvidia-smi

Wed Jul 31 07:38:40 2024

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.183.01 Driver Version: 535.183.01 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce RTX 4090 Off | 00000000:01:00.0 Off | Off |

| 0% 37C P8 21W / 450W | 355MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 N/A N/A 1949 G /usr/lib/xorg/Xorg 205MiB |

| 0 N/A N/A 2170 G /usr/bin/gnome-shell 16MiB |

| 0 N/A N/A 3823 G ...seed-version=20240725-094838.558000 42MiB |

| 0 N/A N/A 7258 G ...erProcess --variations-seed-version 57MiB |

+---------------------------------------------------------------------------------------+

Code

All code can be found in my GitHub repository. To simplify the benchmarking, I created a small script - benchmark.py:

- We will use tokens/s as the metric to measure the performance of different versions of the model. The metric should be agnostic to the batch_size we use.

- We will use the same text we played around with in the previous post, i.e. the text of 1984.

- I ran a small test to find the largest batch_size that fits on the GPU and found batch_size = 8 to be the ideal candidate that fits both models.
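The tokens/s metric described above can be sketched as follows. This is a minimal illustration, not the actual benchmark.py: the function name, the step callback, the warm-up count, and the seq_len value are assumptions made for the example.

```python
import time
from statistics import mean
from typing import Callable, List


def tokens_per_second(
    step: Callable[[], None],
    batch_size: int,
    seq_len: int,
    n_iters: int = 10,
    n_warmup: int = 2,
) -> float:
    """Return the mean tokens/s over n_iters timed calls of step()."""
    # Warm-up calls are not timed (lazy initialization, compilation, caches).
    for _ in range(n_warmup):
        step()

    # Each step processes one batch of batch_size sequences of seq_len tokens.
    tokens_per_step = batch_size * seq_len
    rates: List[float] = []
    for _ in range(n_iters):
        start = time.perf_counter()
        step()  # on a GPU, step() has to block until the work has finished
        elapsed = time.perf_counter() - start
        rates.append(tokens_per_step / elapsed)
    return mean(rates)


if __name__ == "__main__":
    # Stand-in workload; a real run would call the model's forward pass here.
    # seq_len=1024 is an assumed value for illustration.
    rate = tokens_per_second(lambda: time.sleep(0.01), batch_size=8, seq_len=1024)
    print(f"Mean tokens processed per second: {rate:.0f}")
```

Dividing by the elapsed time per step and multiplying by the tokens in a batch is what lets runs with different batch sizes be compared on the same scale.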

PyTorch Eager

Let’s start with the baseline: the Huggingface GPT2 model.

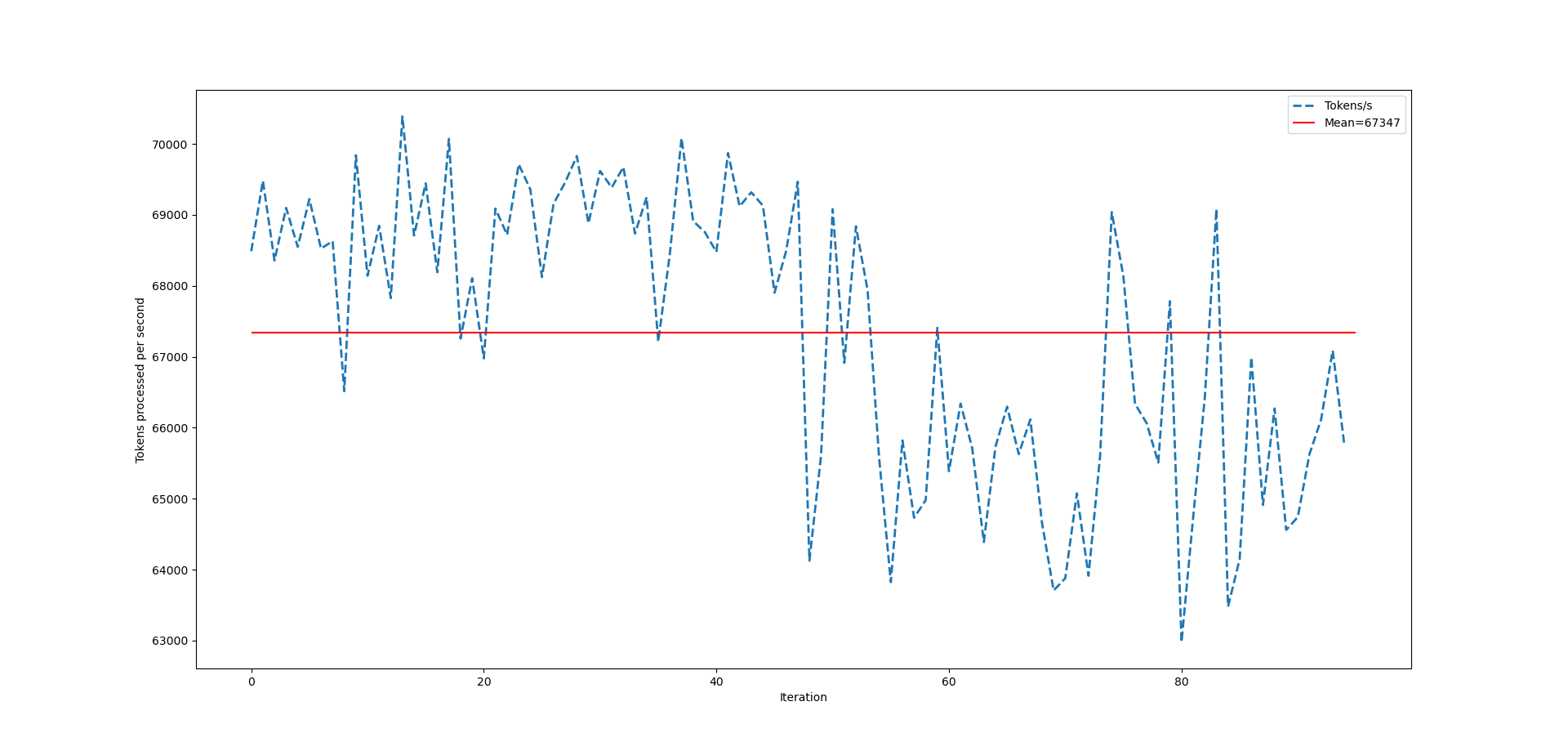

Huggingface GPT2

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --use-hf

# Mean tokens processed per second: 67347

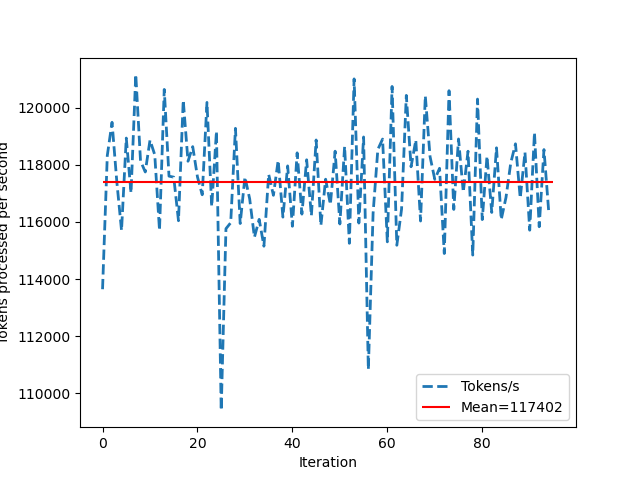

Our GPT2

Now, let’s check the GPT2 model that we created from scratch.

CUDA_LAUNCH_BLOCKING=1 python benchmark.py

# Mean tokens processed per second: 117402

| Model | Tokens/s | Perf |
|---|---|---|
| Baseline (HF GPT2) | 67347 | - |
| Our GPT2 | 117402 | +74% |

NICE! Our Eager GPT2 model is 74% faster than the Huggingface GPT2 model.

Torch compile

So far we have only tested the eager models. torch.compile promises better performance after compilation, so let’s try that.

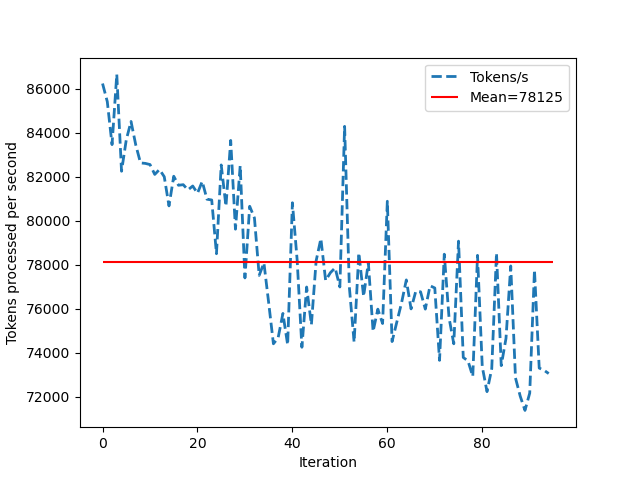

Huggingface GPT2

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --use-hf --torch-compile

...

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 384.00 MiB. GPU

I LIED! batch_size=8 is too large for the compiled Huggingface GPT2 model. I had to reduce it to batch_size=4 to fit the model into the GPU.
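Dropping from batch_size=8 to batch_size=4 by hand can also be automated. The sketch below is not part of benchmark.py: the function name, the run callback, and the halving strategy are assumptions for illustration. With PyTorch you would pass torch.cuda.OutOfMemoryError (the error shown above) as oom_error; here MemoryError is used so the example runs without a GPU.

```python
from typing import Callable, Tuple, Type


def first_fitting_batch_size(
    run: Callable[[int], None],
    start: int = 8,
    oom_error: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> int:
    """Halve the batch size until run(batch_size) no longer raises oom_error."""
    batch_size = start
    while batch_size >= 1:
        try:
            run(batch_size)
            return batch_size
        except oom_error:
            # Did not fit: try again with half the batch.
            batch_size //= 2
    raise RuntimeError("even batch_size=1 does not fit")


if __name__ == "__main__":
    def fake_run(batch_size: int) -> None:
        # Stand-in for one benchmark step: pretend anything above 4 runs out of memory.
        if batch_size > 4:
            raise MemoryError(f"batch_size={batch_size} does not fit")

    print(first_fitting_batch_size(fake_run, start=8))
```

Halving only tries 8, 4, 2, 1, so it finds a size that fits rather than the exact maximum, which is enough for picking a benchmark setting.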

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --use-hf --torch-compile --batch-size=4

# Mean tokens processed per second: 78125

We got a performance boost of 16% by using torch.compile. Let’s see how our GPT2 model performs.

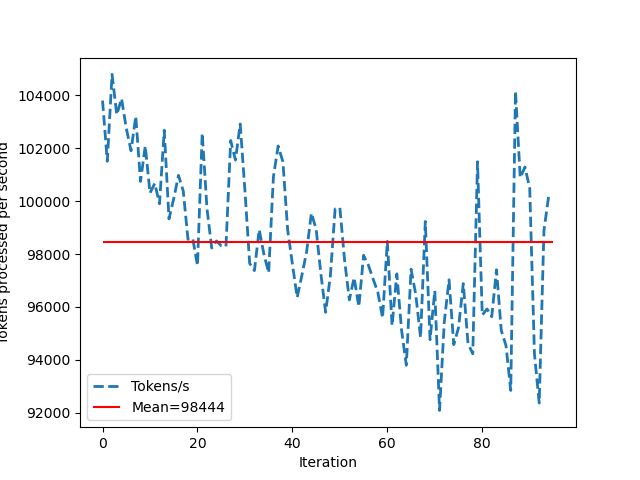

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --torch-compile

# Mean tokens processed per second: 98444

That’s interesting! We got worse performance with the compiled model than with the eager model, although it is still faster than both the eager and the compiled Huggingface GPT2 model.

Let’s summarize the results so far:

| Model | Tokens/s | Perf |
|---|---|---|
| Baseline (HF GPT2) | 67347 | - |
| Our GPT2 | 117402 | +74% |
| HF GPT2 Compiled | 78125 | +16% |
| Our GPT2 Compiled | 98444 | +46% |

Tensor Cores

I was going to do this anyway, but during compilation I got a giant warning that I was not using Tensor Cores. So, let’s enable them and see if we get any performance boost.

/home/ksharma/anaconda3/lib/python3.11/site-packages/torch/_inductor/compile_fx.py:124: UserWarning: TensorFloat32 tensor cores for float32 matrix multiplication available but not enabled. Consider setting torch.set_float32_matmul_precision('high') for better performance.
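The call suggested by the warning is a one-liner. Here is a minimal sketch of how a flag such as --use-tensorcores might apply it; the helper name is hypothetical, and that benchmark.py does exactly this is an assumption.

```python
import torch


def enable_tf32_matmul() -> str:
    """Allow float32 matmuls to use Tensor Cores and return the active setting."""
    # "highest" (the default) keeps full float32 precision for matmuls;
    # "high" permits TensorFloat32, trading a little precision for speed.
    torch.set_float32_matmul_precision("high")
    return torch.get_float32_matmul_precision()


if __name__ == "__main__":
    print(enable_tf32_matmul())
```

The setting is global, so it should be applied once before the model is compiled and benchmarked.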

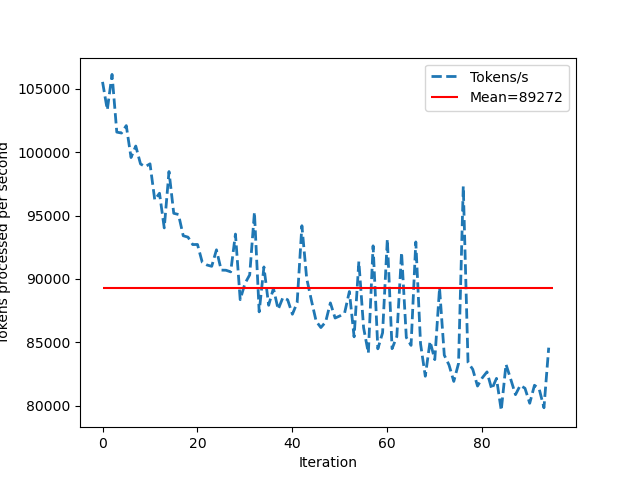

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --use-hf --torch-compile --use-tensorcores --batch-size=4

# Mean tokens processed per second: 89272

Tensor Cores gave the Huggingface GPT2 model another ~14% over the compiled run, which brings it to +33% over the eager baseline. Let’s see how our GPT2 model performs.

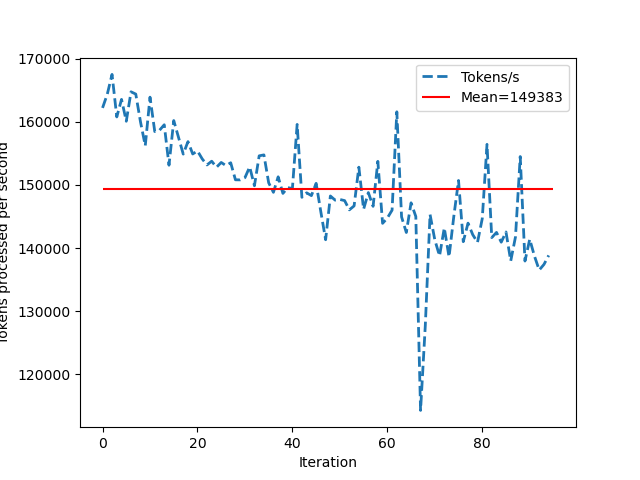

CUDA_LAUNCH_BLOCKING=1 python benchmark.py --torch-compile --use-tensorcores

# Mean tokens processed per second: 149383

Enabling Tensor Cores gave our GPT2 model a performance boost of ~52% w.r.t. the compiled model and 27% w.r.t. the eager model.

Summary

Here is the summary of the results:

| Model | Tokens/s | Perf |
|---|---|---|
| Baseline (HF GPT2) | 67347 | - |
| Our GPT2 | 117402 | +74% |
| HF GPT2 Compiled | 78125 | +16% |
| Our GPT2 Compiled | 98444 | +46% |
| HF GPT2 Compiled (with Tensor Cores) | 89272 | +33% |
| Our GPT2 Compiled (with Tensor Cores) | 149383 | +122% |
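The Perf column is each row's tokens/s relative to the eager Huggingface baseline. A small sketch that recomputes it from the measured numbers in the table; the helper and variable names are my own.

```python
from typing import Dict

BASELINE_TOKENS_PER_S = 67347  # HF GPT2, eager

RESULTS: Dict[str, int] = {
    "Our GPT2": 117402,
    "HF GPT2 Compiled": 78125,
    "Our GPT2 Compiled": 98444,
    "HF GPT2 Compiled (with Tensor Cores)": 89272,
    "Our GPT2 Compiled (with Tensor Cores)": 149383,
}


def speedup_pct(tokens_per_s: float, reference: float) -> float:
    """Relative change of tokens_per_s over reference, in percent."""
    return (tokens_per_s / reference - 1.0) * 100.0


if __name__ == "__main__":
    for name, rate in RESULTS.items():
        print(f"{name}: {speedup_pct(rate, BASELINE_TOKENS_PER_S):+.0f}%")
```

The same helper compares any two rows directly, for example speedup_pct(149383, 98444) for the effect of Tensor Cores on our compiled model.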

Why is our GPT2 model faster?

I looked further into the Huggingface documentation and found that you can pass attn_implementation="flash_attention_2" when loading a pretrained model to use Flash Attention. The option is not enabled by default.

I tried to enable it and got a nice error message:

ValueError: GPT2LMHeadModel does not support Flash Attention 2.0 yet. Please request to add support where the model is hosted, on its model hub page: https://huggingface.co/gpt2/discussions/new or in the Transformers GitHub repo: https://github.com/huggingface

So, this is where the story ends! It was fun exploring and benchmarking our GPT2 model against the Huggingface GPT2 model.
